Capturing user intentions and turning them into actions.

Brain-Computer Interfaces (BCIs) are playing an increasingly important role in a broad spectrum of applications in health, industry, education, and entertainment. We present a novel, mobile and non-invasive BCI for advanced robot control that is based on a brain imaging method known as functional near-infrared spectroscopy (fNIRS). This BCI is based on the concept of “automated autonomous intention execution” (AutInEx), that is, the automated execution of possibly very complex actions and action sequences intended by a human through an autonomous robot.

Goal

Develop a reliable framework for a real-time brain-robot interface (BRI) based on fNIRS.

Progress

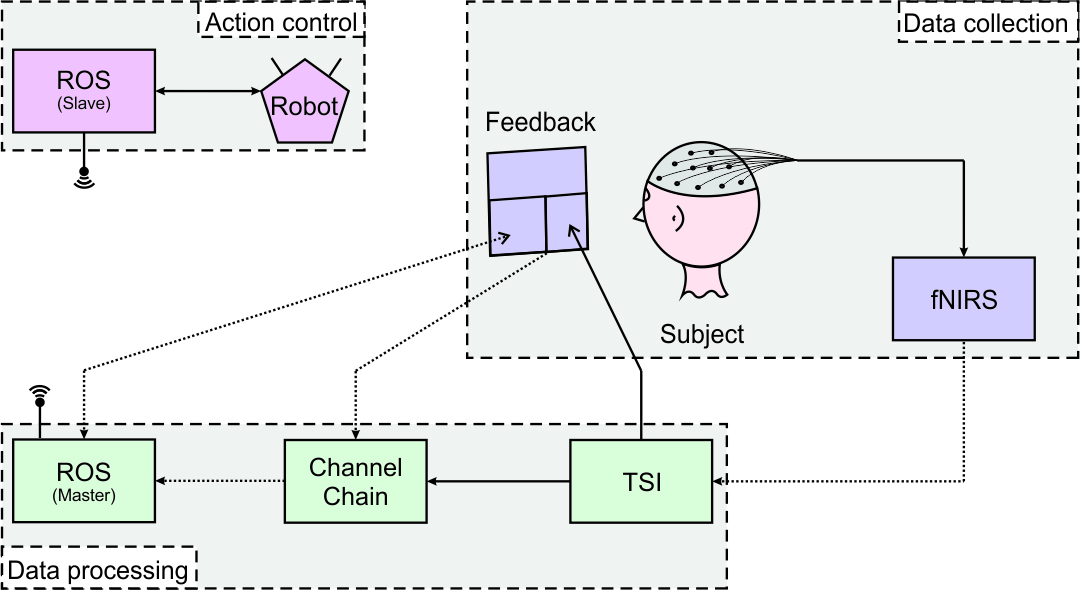

The work began with the design of the interface architecture. This architecture was intended to connect three logically and physically separable procedures: Data collection, Data processing, and Action control. In Data collection, the user of the BRI is recorded according to a prepared acquisition protocol while being informed about the recording state. In Action control, the robot acts autonomously in the given environment and must be capable of executing the user-specified tasks. Finally, Data processing glues the other two procedures together so that the brain data recorded from the user is mapped correctly and in a timely manner to the tasks the robot performs. The initial architecture is shown in the figure above.

The architecture above was first tested in an offline setting, i.e. by simulating the recording of a real subject with a stream of prerecorded data. Thus, originally, the Data acquisition block was entirely replaced by a set of raw data logs. An offline version of TurboSatori (TSI) was used to load the logs, preprocess the data, and pass it on for deeper analysis. An offline version of ChannelChain was used to map the experiment protocol the user had to follow (the encoding) to the available robotic tasks, to classify the data received from TSI, and to output the decoded task selection to the Robot Operating System (ROS) controller. The ROS controller managed the robot's task performance.
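The offline data flow described above (replayed logs, preprocessing, classification, task mapping, controller) can be pictured with a minimal Python sketch. Everything in it is an illustrative assumption rather than the actual TurboSatori, ChannelChain, or ROS code: the `Trial` layout, the baseline correction, the threshold classifier, and the task names in `TASK_MAP` are all hypothetical stand-ins.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, Sequence


@dataclass(frozen=True)
class Trial:
    """One prerecorded trial of a single channel (hypothetical log layout)."""
    samples: Sequence[float]


def replay(log: Iterable[Trial]) -> Iterator[Trial]:
    """Stand-in for data acquisition: stream prerecorded trials in order."""
    yield from log


def preprocess(trial: Trial, baseline_len: int = 3) -> list[float]:
    """Subtract the mean of the first `baseline_len` samples (assumed step)."""
    baseline = sum(trial.samples[:baseline_len]) / baseline_len
    return [s - baseline for s in trial.samples]


def classify(signal: Sequence[float], threshold: float = 0.5) -> str:
    """Toy classifier: label a trial by its mean baseline-corrected amplitude."""
    mean = sum(signal) / len(signal)
    return "active" if mean > threshold else "rest"


# Hypothetical mapping from decoded labels to robot tasks.
TASK_MAP = {"active": "fetch_object", "rest": "stay_idle"}


def run_offline(log: Iterable[Trial], send_task: Callable[[str], None]) -> None:
    """Push every replayed trial through the chain and hand the task onward."""
    for trial in replay(log):
        label = classify(preprocess(trial))
        send_task(TASK_MAP[label])  # in a real setup: the robot controller


if __name__ == "__main__":
    demo_log = [Trial([0.0, 0.0, 0.0, 2.0, 2.0, 2.0]), Trial([0.1] * 6)]
    run_offline(demo_log, print)
```

Because the acquisition stage is just an iterator here, swapping the replayed log for a live source would leave the rest of the chain unchanged, which is the property that makes an offline test a useful first step.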

Once the offline tests indicated that the framework was functioning correctly, the setup was transferred to the online setting. The classification process was improved and the user feedback software RoboFeedback was introduced. RoboFeedback allows the user to monitor and control the robot placed in the ROS environment. This module is essential in bridging the gap between the robot and the user, who might not even see the task being performed.
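To illustrate the role a feedback module plays for a user who cannot see the robot, the sketch below turns robot status updates into short messages. It is not RoboFeedback's interface: the `RobotStatus` values, the `FeedbackChannel` class, and the message texts are hypothetical, and no ROS calls are involved.

```python
from __future__ import annotations

from enum import Enum
from typing import Callable, Optional, Tuple


class RobotStatus(Enum):
    """Hypothetical task states reported by the robot side."""
    EXECUTING = "executing"
    DONE = "done"
    FAILED = "failed"


# Assumed message templates shown to the user.
MESSAGES = {
    RobotStatus.EXECUTING: "Robot is executing '{task}'.",
    RobotStatus.DONE: "Robot finished '{task}'.",
    RobotStatus.FAILED: "Robot could not complete '{task}'.",
}


class FeedbackChannel:
    """Forward status changes to the user, skipping repeated updates."""

    def __init__(self, show: Callable[[str], None]) -> None:
        self._show = show
        self._last: Optional[Tuple[str, RobotStatus]] = None

    def update(self, task: str, status: RobotStatus) -> None:
        key = (task, status)
        if key == self._last:
            return  # nothing changed, so do not repeat the message
        self._last = key
        self._show(MESSAGES[status].format(task=task))


if __name__ == "__main__":
    channel = FeedbackChannel(print)
    channel.update("fetch_object", RobotStatus.EXECUTING)
    channel.update("fetch_object", RobotStatus.EXECUTING)  # suppressed
    channel.update("fetch_object", RobotStatus.DONE)
```

Suppressing repeated updates is a design choice in this sketch: a robot may report its state many times per second, while the user only needs to know when something changes.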

Then, a few recording experiments were conducted using the designed real-time framework. For a better impression of what a recording session looks like, see this conceptual video:

Currently, the framework is being improved in a number of ways:

- Support of multiple robots

- Support of multiple signal sources

- Experiment protocol design optimization

- Subject stimulation

- Experiment sequencing

- Simplification and generalization of the overall framework design

- More advanced and flexible data (pre)processing

- Grid computing for real-time experiment continuity

We are currently recording volunteers to evaluate the improved framework. If you are willing to participate, don’t hesitate to contact us. 🙂

Contact

Kirill Tumanov (k.tumanov@maastrichtuniversity.nl)

Publications

- B. Sorger, K.I. Tumanov, A. Benitez Andonegui, M. Luhrs, H. Boeijkens, G. Weiss, R. Goebel, R. Möckel. An fNIRS-based brain-machine interface for remote robot control. Real-time Functional Imaging and Neurofeedback Conference (rtFIN’17), P125, 2017. [Poster]

- K.I. Tumanov, R. Goebel, R. Möckel, B. Sorger, G. Weiss. fNIRS-based BCI for Robot Control (Demonstration). 14th International Conference on Autonomous Agents and Multiagent Systems (AAMAS’2015), 1953-1954, 2015. [Paper] [Poster]